How to Use Meta Tags for SEO: Ultimate Guide in 2022

Meta tags are snippets of code that give search engines valuable information about your web page. They also tell web browsers and search engines how to display the page to visitors and in search results.

Most web pages have meta tags, but they are not visible on the page itself. Their contents appear only in the page’s HTML source.

In this guide, you will learn how to use meta tags for SEO, and how not to.

Table of contents:

- What are Meta Tags?

- Why Are Meta Tags Important for SEO?

- Types of Meta Tags for SEO

- How Does Google Understand Meta Tags?

- How to Optimize Meta Tags for SEO?

What are Meta Tags?

Meta tags are HTML tags that are not displayed on the page itself but provide important information about it to search engines and, through search results, to visitors. They help search engines understand what your content is about.

Meta tags are placed in the <head> section of an HTML document, so you add them either directly in your HTML or through your content management system (CMS). Meta tags are a great way for website owners to provide information to all sorts of clients, and each client processes only the meta tags it understands and ignores the rest.

Before we dive deep into the nitty-gritty of which meta tags to use, let’s talk about why they are so important for SEO.

Why Are Meta Tags Important for SEO?

Meta tags give search engines and website visitors more information about your site’s content. They highlight the most important and unique elements of your content so that your site stands out from the crowd.

Search engines are user-centric: they prioritize a good user experience, which includes answering each user’s query as quickly and accurately as possible. Meta tags help make sure that the information users want about your page appears upfront, in a concise and useful form, right in the search results.

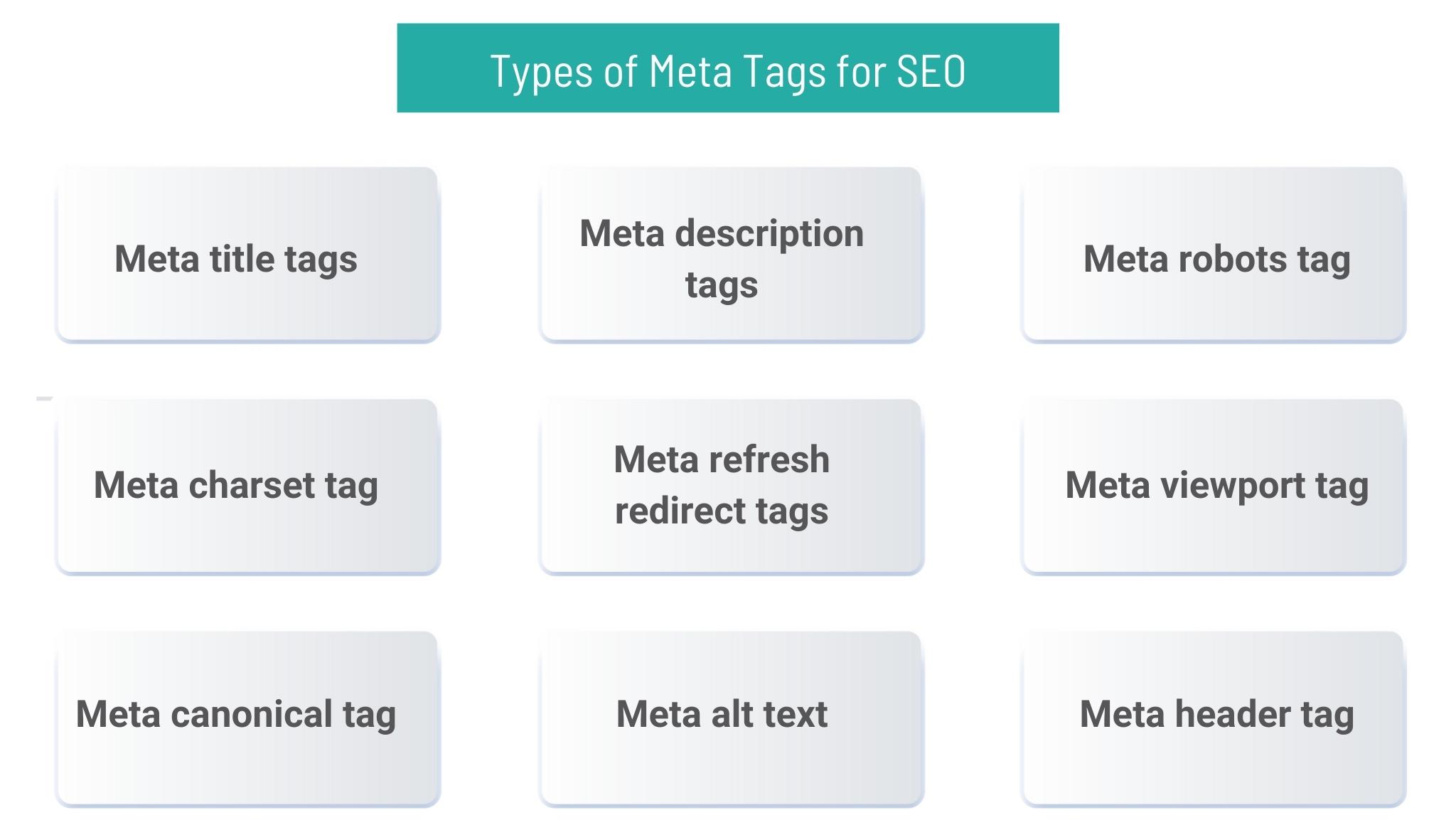

There are many types of meta tags with different roles, but not all of them are useful for SEO. Now that you know why meta tags matter, let’s look at the ones most relevant to search engine optimization.

Types of Meta Tags for SEO

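Here are the tags that matter most for an SEO strategy. Strictly speaking, some of them are not <meta> elements: the title is its own <title> element, the canonical tag is a <link> element, alt text is an attribute of the <img> tag, and headings are the <h1> to <h6> elements. They are grouped together here because they all shape how search engines understand your page.

- Title tag, to name your page in search results.

- Meta description tag, to describe your web page in search results.

- Meta robots tag, to tell search engines whether to index your page and follow its links.

- Meta charset tag, to define the character encoding of the page.

- Meta refresh redirect tag, to send the user to a new URL after a set delay.

- Meta viewport tag, to indicate how to render a page on mobile devices.

- Canonical tag, to tell search engines which version of duplicate pages is the main one.

- Alt text, to provide a text alternative for images.

- Header tags, to structure your content with headings.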

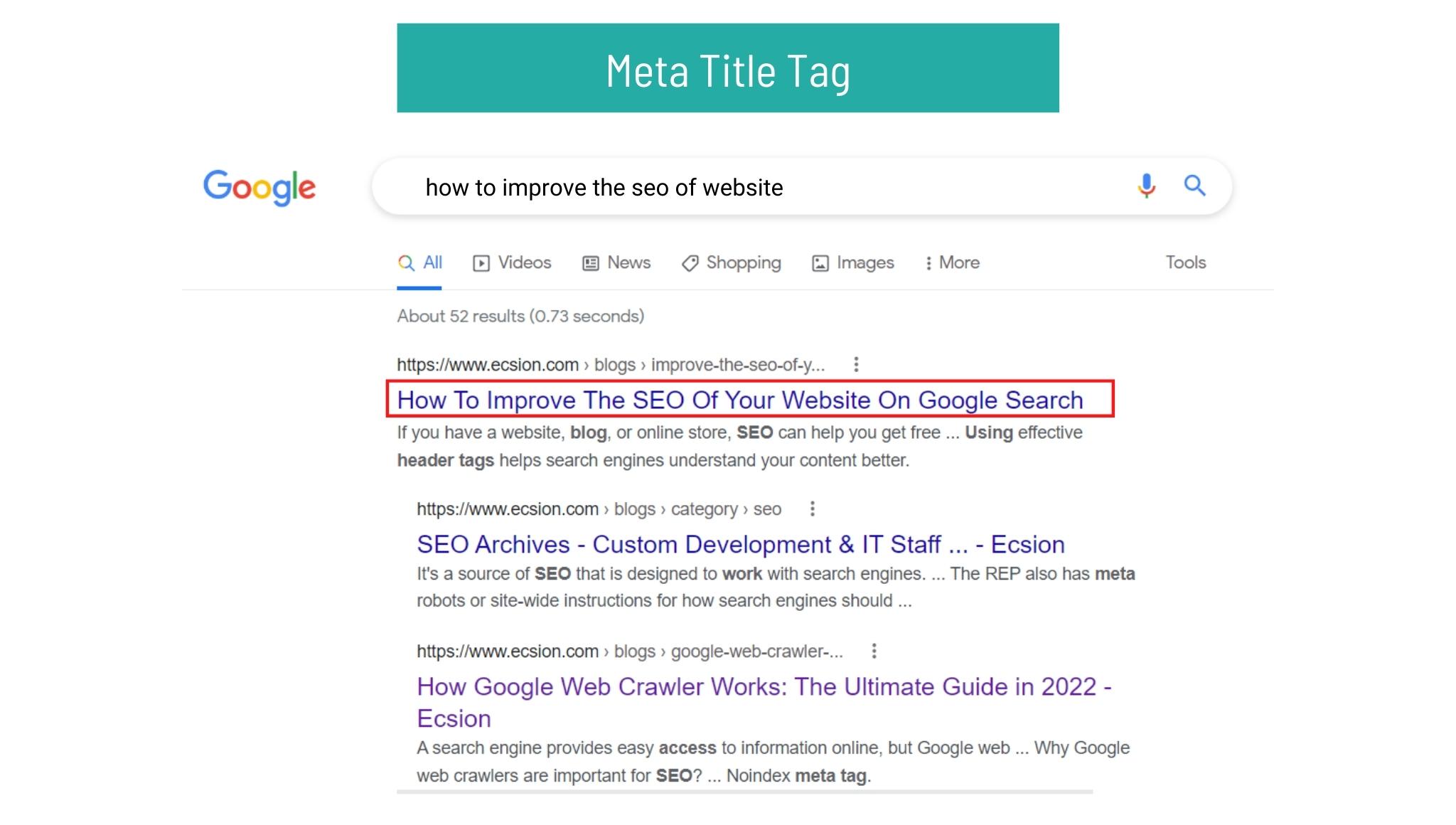

1. Meta Title Tag

The title tag is usually the first thing users notice in search results. The title shown in the SERPs gives readers a quick preview of what your content is about, and it is the main piece of information they use to judge whether a result matches their query and whether to click on it.

Your title tag is not just for the users, but also for the search engines that discover your content. So, it is important to write high-quality title tags for your web pages.

But how do you write a title tag?

It’s simple: copy and paste the code below into the <head> section of your web page, then replace the text with your own title:

<head>
<title>This is the title of your page</title>
</head>

Here are a few best practices for using title tags on your web pages:

- Write a unique title tag for each page;

- Be brief, but descriptive and clear;

- Avoid vague and generic titles;

- Write something compelling that earns the click;

- Include your target keywords where they fit naturally;

- Keep it under about 55 characters so it isn’t cut off in search results.
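If you manage many pages, you can check some of these rules automatically. Here is a minimal Python sketch, assuming you already have each page’s title as a string. The function name `check_title` and the sample set of generic titles are illustrative assumptions, and the 55-character limit comes from the list above rather than from any official Google rule.

```python
# Illustrative title checker; the limit and the generic-title set are assumptions.
MAX_TITLE_LENGTH = 55  # character guideline from the best-practice list
GENERIC_TITLES = {"home", "untitled", "new page", "index"}  # hypothetical examples


def check_title(title: str) -> list[str]:
    """Return a list of problems found in a page title (empty if none)."""
    cleaned = title.strip()
    if not cleaned:
        return ["missing title"]
    problems = []
    if len(cleaned) > MAX_TITLE_LENGTH:
        problems.append(f"too long ({len(cleaned)} > {MAX_TITLE_LENGTH} characters)")
    if cleaned.lower() in GENERIC_TITLES:
        problems.append("generic title")
    return problems


print(check_title("Home"))  # ['generic title']
print(check_title("How to Use Meta Tags for SEO: Ultimate Guide in 2022"))  # []
```

A checker like this only catches mechanical problems; whether a title is descriptive and click-worthy still needs a human eye.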

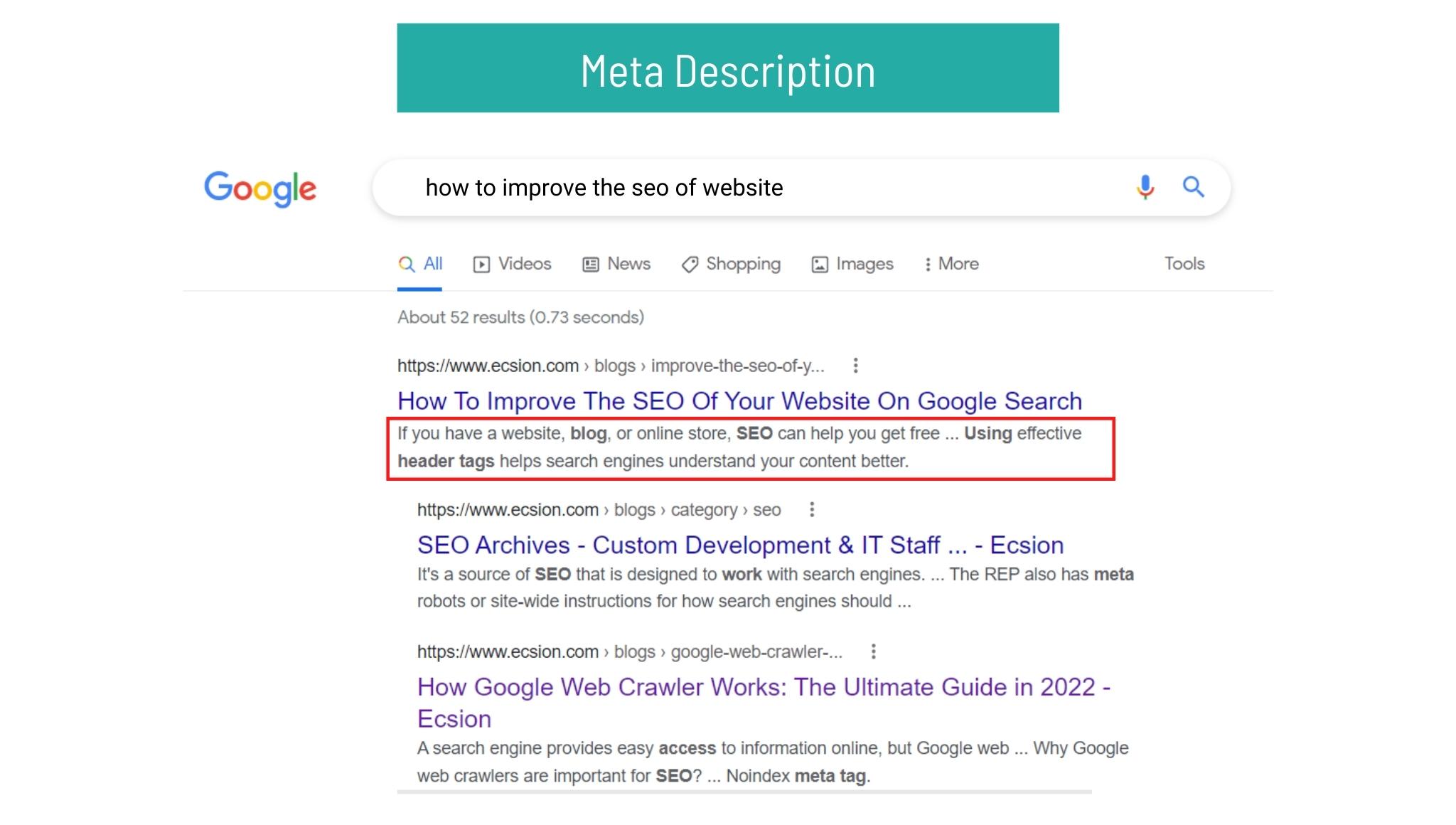

2. Meta Description

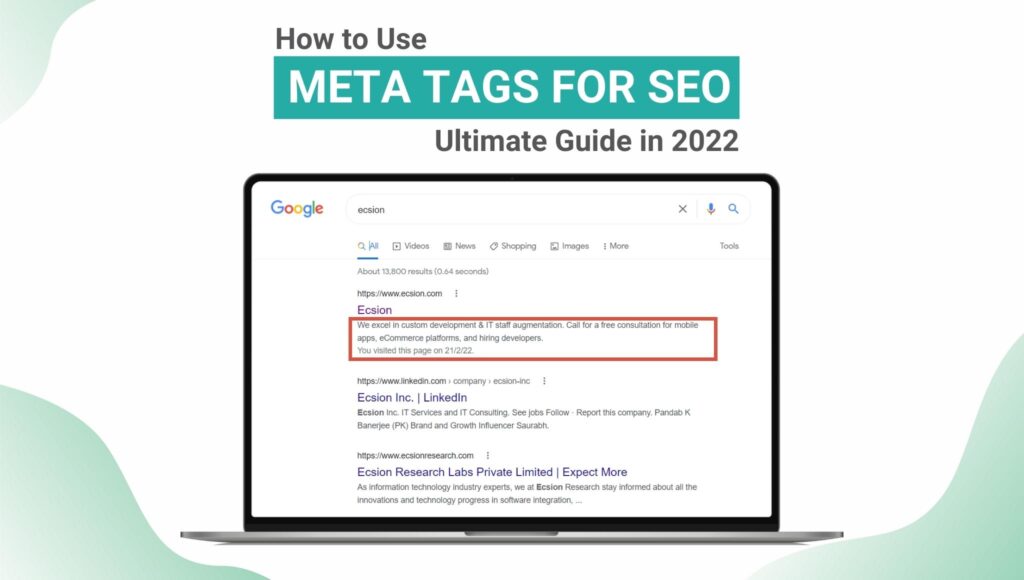

The meta description tag summarizes the page’s content. Google does not use it as a ranking signal, but it is just as important as the title tag for winning clicks. If the title tag is the headline of your page in the search results, the meta description is the snippet displayed underneath it (although Google sometimes shows other text from the page instead). It works like a short pitch that convinces users the page is exactly what they are looking for.

The meta description should describe your page precisely. Use it wisely: it’s your chance to give users more details about your content, so make it appealing, descriptive, clear, and relevant.

You can add a meta description manually in your page’s HTML, for example:

<head>
<meta name="description" content="Here is a precise description of my page.">
</head>

Here are a few best practices for using meta description tags on your web pages:

- Write a unique description for each page;

- Summarize your content accurately;

- Avoid unclear descriptions;

- Provide relevant content;

- Make it compelling;

- Include keywords where it makes sense;

- Keep it under about 160 characters so it isn’t truncated;

- Avoid duplicate meta descriptions across multiple pages.
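To catch missing, overlong, or duplicated descriptions across a site, you could run a small audit over all your pages. This Python sketch assumes you have already collected each page’s description into a dictionary keyed by URL; the function name `audit_descriptions` and the sample pages are hypothetical, and the 160-character limit comes from the list above.

```python
from collections import defaultdict

MAX_DESCRIPTION_LENGTH = 160  # guideline from the best-practice list


def audit_descriptions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each page URL to the problems found in its meta description."""
    problems: dict[str, list[str]] = {url: [] for url in pages}
    by_text = defaultdict(list)  # normalized description -> URLs that use it
    for url, description in pages.items():
        text = " ".join(description.split())  # collapse whitespace
        if not text:
            problems[url].append("missing description")
            continue
        if len(text) > MAX_DESCRIPTION_LENGTH:
            problems[url].append(f"too long ({len(text)} characters)")
        by_text[text.lower()].append(url)
    # Any description shared by two or more pages is a duplicate.
    for urls in by_text.values():
        if len(urls) > 1:
            for url in urls:
                problems[url].append("duplicate description")
    return problems


# Hypothetical pages for illustration.
pages = {
    "/mugs": "Shop handmade ceramic mugs.",
    "/cups": "Shop handmade ceramic mugs.",
    "/about": "",
}
print(audit_descriptions(pages))
# {'/mugs': ['duplicate description'], '/cups': ['duplicate description'], '/about': ['missing description']}
```

Comparing descriptions case-insensitively after collapsing whitespace is a design choice here, so that near-identical copies are flagged too.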

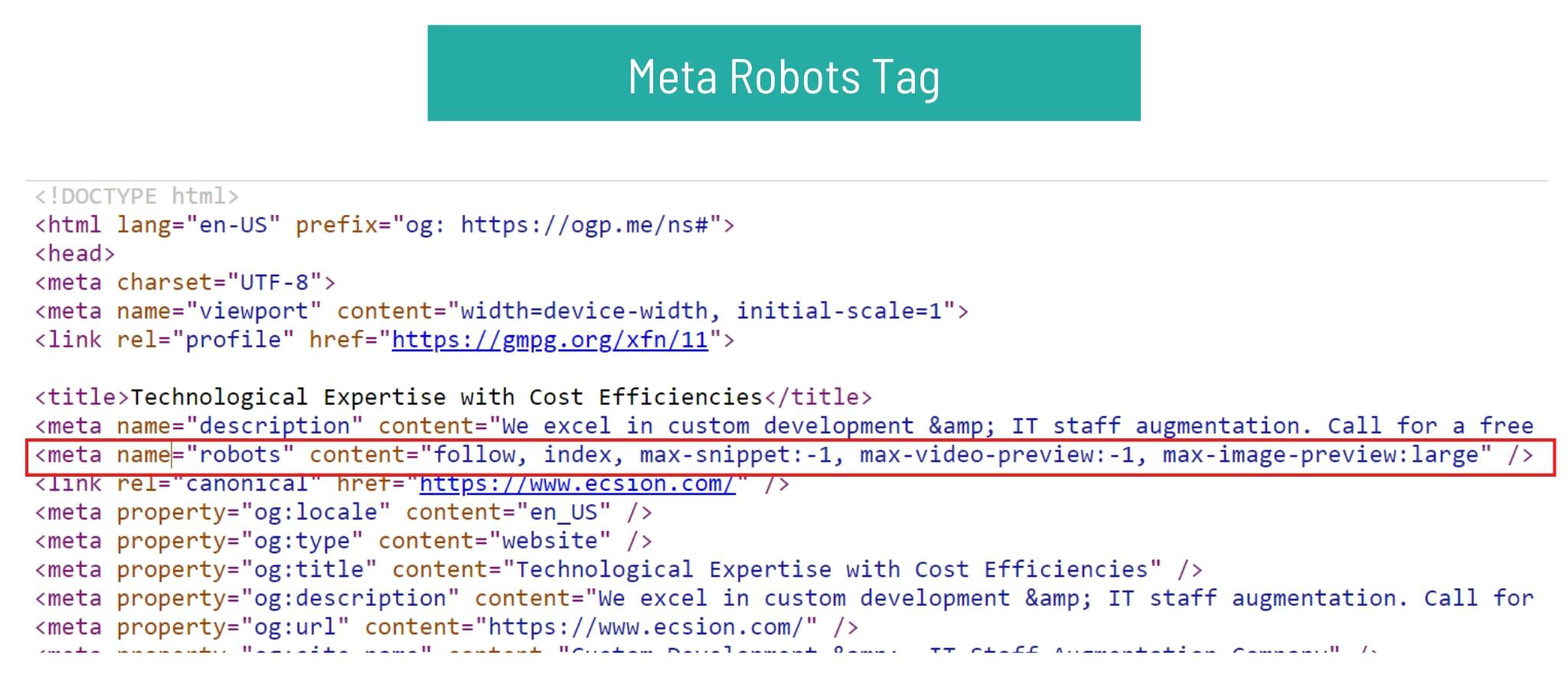

3. Meta robots tag

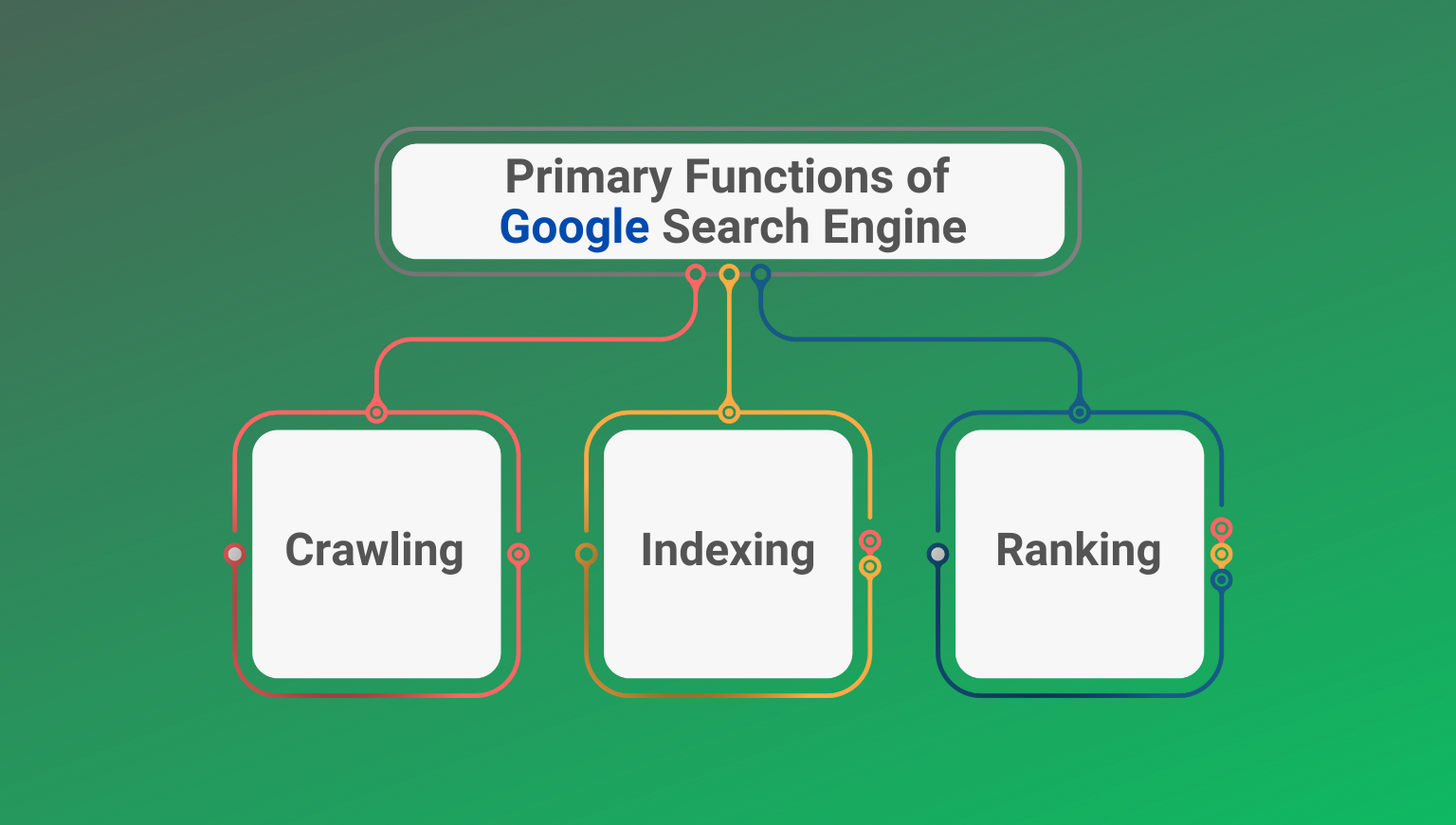

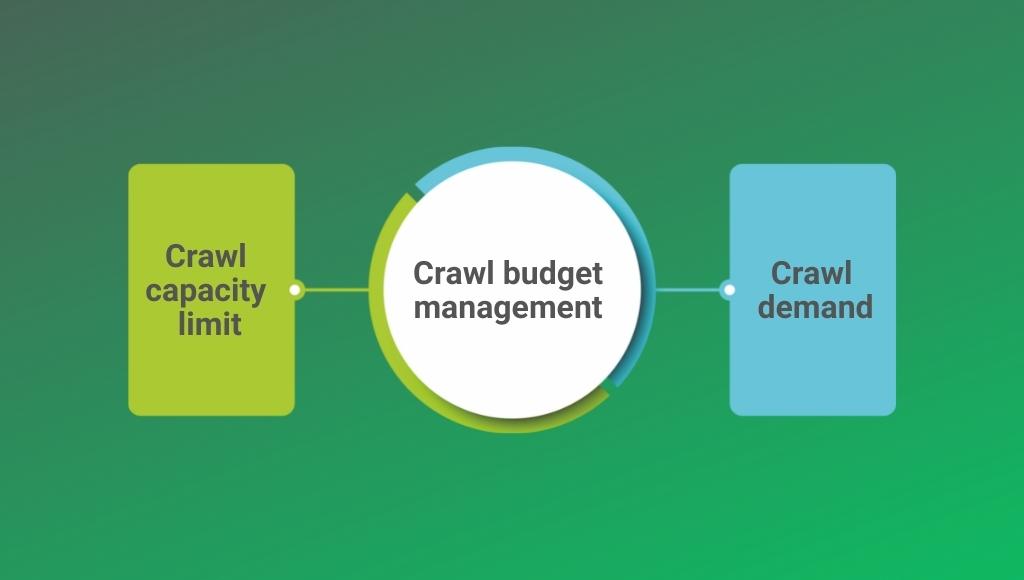

The robots meta tag tells search engines whether they may index a page and follow the links on it. Using the wrong robots meta tag can have a disastrous impact on your website’s presence in search results, so your SEO efforts depend on understanding this tag and using it correctly.

It is related to robots.txt, but the two work differently: the robots meta tag controls whether an individual page is indexed, while the robots.txt file controls which URLs crawlers may request across the whole site or sections of it. A URL blocked by robots.txt can still be indexed if other pages link to it, so use the robots meta tag when you need to keep a specific page out of search results.

A robots meta tag that tells search engines not to index a page or follow its links looks like this:

<meta name="robots" content="noindex, nofollow" />

A robots meta tag that tells search engines to index a page and follow its links looks like this:

<meta name="robots" content="index, follow" />

The robots meta tag goes in the <head> section of the page, like this:

<!DOCTYPE html>
<html>
<head>
<meta name="robots" content="noindex" />
(…)
</head>
<body>(…)</body>
</html>

If a page has no robots meta tag, search engine crawlers will index it and follow its links by default. Robots meta tags let you make sure that search engine spiders process each page the way you want them to.

Here are a few best practices for using robots meta tags on your web pages:

- Use the robots meta tag when you want to restrict how search engines index a page or follow its links;

- Don’t block pages that carry a robots meta tag in robots.txt: if crawlers can’t fetch the page, they never see the tag;

- Watch out for stray noindex tags: a noindex left on an important page keeps Google from indexing it, so it will get no organic traffic.
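To see how these directives combine, here is a simplified Python sketch that interprets a robots meta tag’s content attribute. It is an illustration under stated assumptions, not how Googlebot is implemented: it only handles index/noindex, follow/nofollow, and none (which Google treats as noindex, nofollow), applies the index-and-follow defaults described above, and ignores other directives and crawler-specific tags such as name="googlebot". The function name `parse_robots` is hypothetical.

```python
def parse_robots(content: str) -> dict[str, bool]:
    """Interpret a robots meta tag's content attribute, e.g. "noindex, nofollow".

    Directives are case-insensitive; anything not mentioned falls back to the
    defaults (index and follow). Other directives are ignored in this sketch.
    """
    directives = {part.strip().lower() for part in content.split(",") if part.strip()}
    blocked_all = "none" in directives  # "none" means noindex, nofollow
    return {
        "index": not blocked_all and "noindex" not in directives,
        "follow": not blocked_all and "nofollow" not in directives,
    }


print(parse_robots("noindex, nofollow"))  # {'index': False, 'follow': False}
print(parse_robots("index, follow"))      # {'index': True, 'follow': True}
print(parse_robots("NOINDEX"))            # {'index': False, 'follow': True}
```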

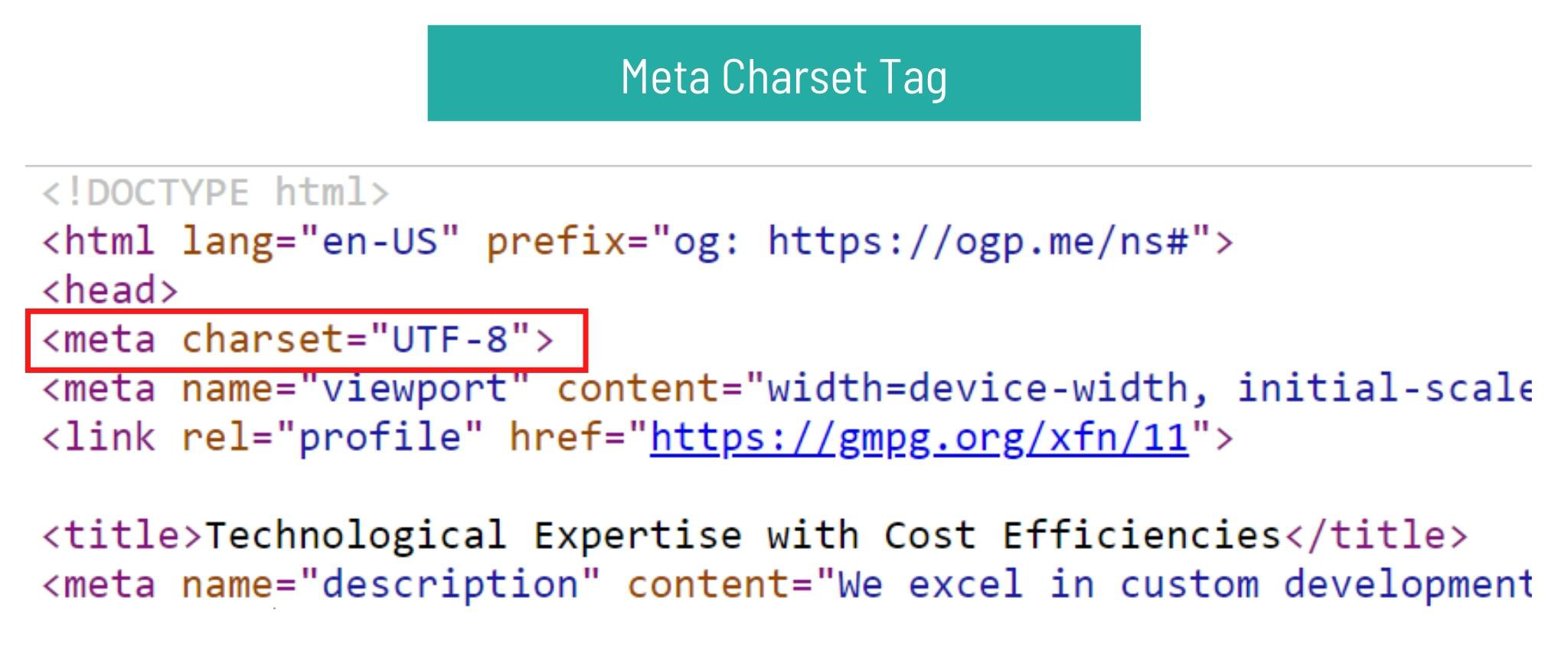

4. Meta charset tag

The charset tag sets the character encoding for the web page. It tells the web browser how the text on your web page should be displayed.

The two most common character sets are:

- UTF-8, character encoding for Unicode;

- ISO-8859-1, character encoding for the Latin alphabet.

To add the meta charset tag, paste the following code into the <head> section of your web page, ideally as its first element so browsers see it before any text:

<meta charset="UTF-8">

Here are a few best practices for using charset tags on your web pages:

- Add a meta charset tag to every page;

- Use UTF-8 where it makes sense;

- Use correct HTML syntax.
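Why does the declared encoding matter? The same bytes turn into different text depending on which character set is used to decode them. This short Python illustration (not something you need on your site) encodes a word as UTF-8 and then decodes it both correctly and, as a wrong guess, as ISO-8859-1:

```python
word = "café"
raw = word.encode("utf-8")  # the bytes a server would send: b'caf\xc3\xa9'

# Decoding with the charset the page actually uses restores the text.
print(raw.decode("utf-8"))       # café

# Decoding with the wrong charset turns the two UTF-8 bytes of "é"
# into two separate Latin-1 characters ("mojibake").
print(raw.decode("iso-8859-1"))  # cafÃ©
```

A correct charset declaration that matches how your files are actually saved prevents this kind of garbled text.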

5. Meta refresh redirect tag

The meta refresh redirect tag tells the browser to redirect the user to a different URL after a set amount of time. It is best avoided: not all browsers handle it consistently, it can raise security concerns, and it confuses users.

If you really need a refresh redirect, paste the code below into the <head> section of your web page. The number before the URL is the delay in seconds:

<meta http-equiv="refresh" content="5;url=https://example.com/">

Here are a few best practices for using refresh redirect tags on your web pages:

- Avoid meta refresh redirects unless they are absolutely necessary;

- Use a server-side 301 redirect instead.
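A 301 redirect is set up on the server rather than in the page, and how you do it depends on your web server or CMS. As one hedged illustration, here is a minimal Python WSGI application that answers an old path with a permanent 301 redirect. The `REDIRECTS` mapping and its URLs are hypothetical placeholders.

```python
# Hypothetical mapping from old paths to their new permanent URLs.
REDIRECTS = {
    "/old-page": "https://example.com/new-page",
}


def app(environ, start_response):
    """Minimal WSGI app: known old paths get a 301, everything else a normal page."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and search engines the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<p>Regular page content</p>"]
```

To try it locally, you could serve it with Python’s built-in `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. On a live site you would normally configure redirects in your web server or CMS instead.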

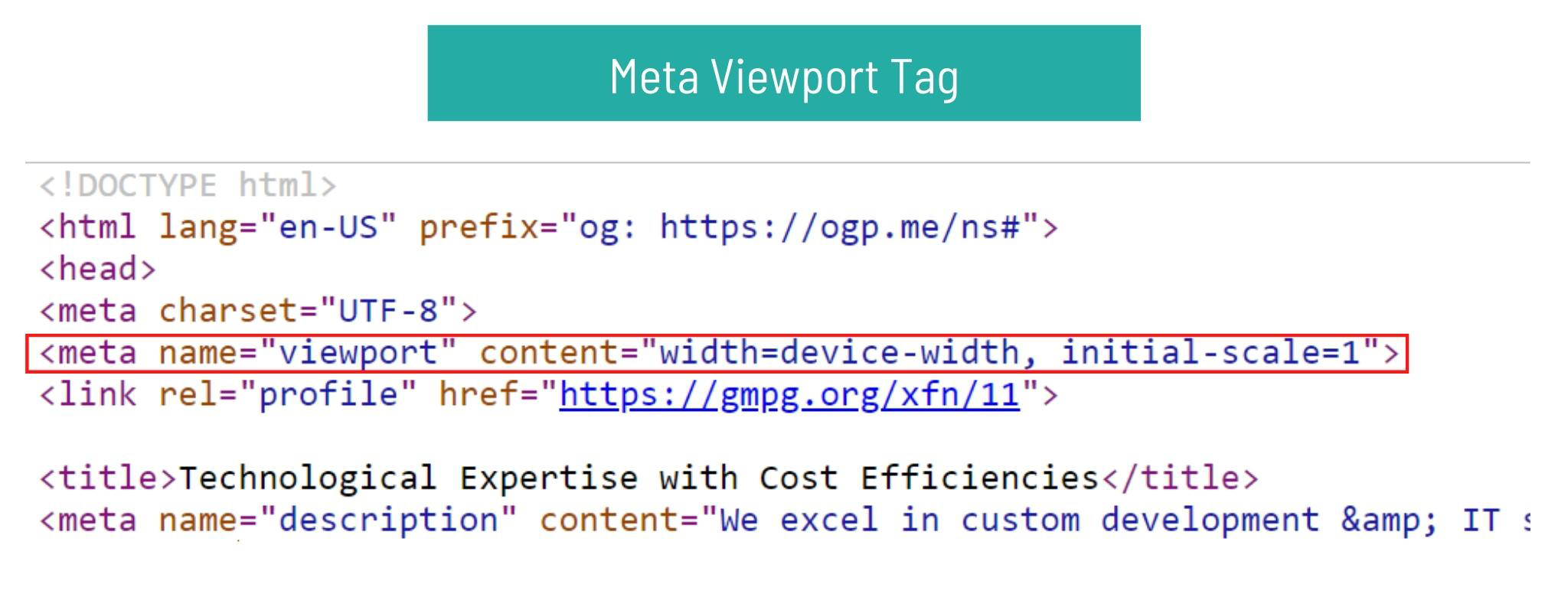

6. Meta viewport tag

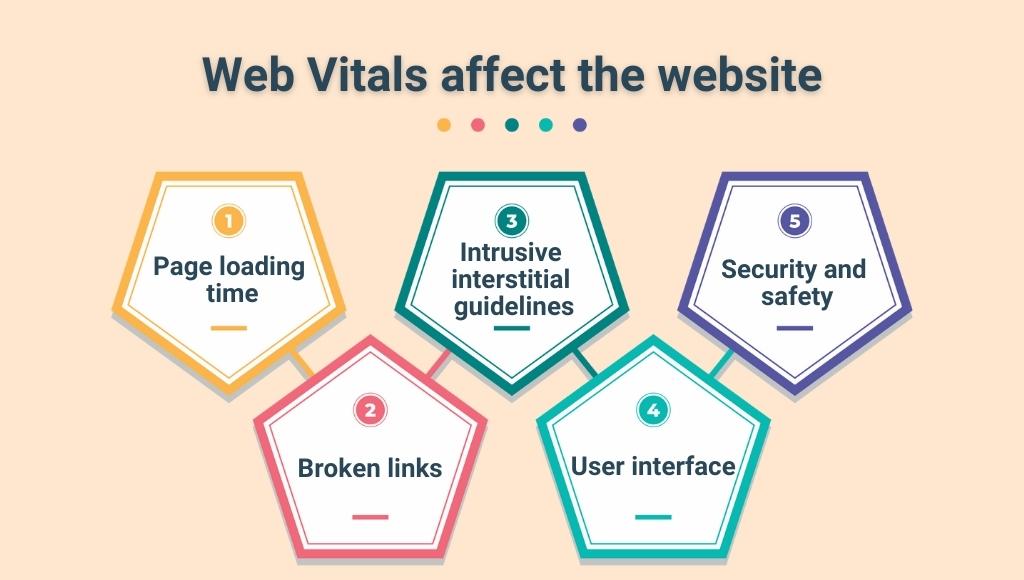

The viewport tag controls the visible area of a web page. It tells the browser how to render the page on different screen sizes. A viewport tag is a basic requirement for a mobile-friendly page, although it does not make a page mobile-friendly on its own, and search engines like Google favor mobile-friendly pages in their rankings.

Users are likely to hit the back button if the desktop version of a page loads on a mobile device, because it is annoying and hard to read. This can send a negative signal to Google about your page.

To add a viewport tag, paste the code below into the <head> section of your page:

<meta name="viewport" content="width=device-width, initial-scale=1.0">

Here are a few best practices for using viewport tags on your web pages:

- Add a viewport tag to every web page;

- Use the standard tag shown above unless you have a specific reason to change it.
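If you audit pages in bulk, you might want to check whether each one uses the standard viewport settings. Here is a minimal Python sketch; the function names are hypothetical, and treating only width=device-width with initial-scale=1 or 1.0 as "standard" is an assumption based on the tag shown above.

```python
def parse_viewport(content: str) -> dict[str, str]:
    """Split a viewport content string such as
    "width=device-width, initial-scale=1.0" into key/value pairs."""
    settings = {}
    for part in content.split(","):
        key, sep, value = part.partition("=")
        if sep:  # ignore malformed entries without "="
            settings[key.strip().lower()] = value.strip()
    return settings


def is_standard_viewport(content: str) -> bool:
    """True if the tag uses the standard responsive settings (our assumption)."""
    settings = parse_viewport(content)
    return (settings.get("width") == "device-width"
            and settings.get("initial-scale") in {"1", "1.0"})


print(parse_viewport("width=device-width, initial-scale=1.0"))
# {'width': 'device-width', 'initial-scale': '1.0'}
print(is_standard_viewport("width=1024"))  # False
```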

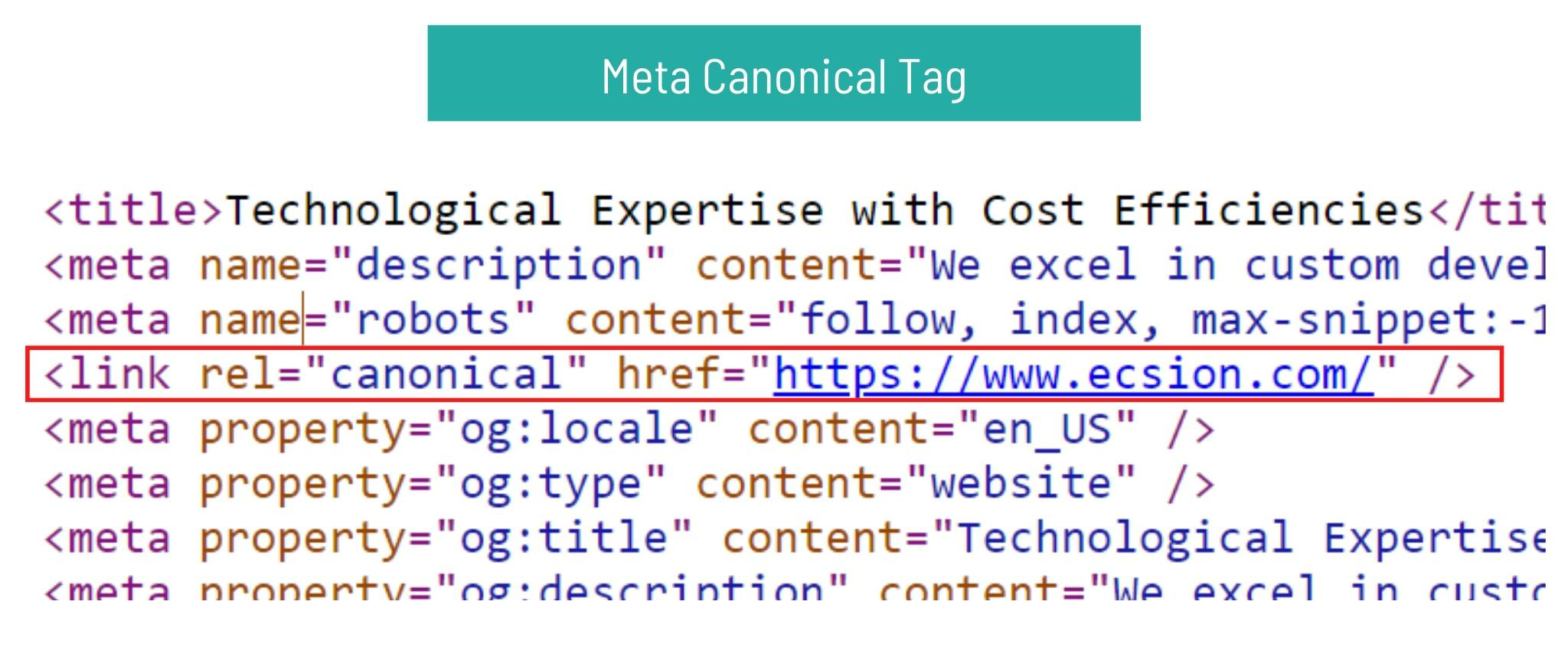

7. Meta canonical tag

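If your website has identical or very similar pages, you can tell search engines which version to prioritize by adding a canonical tag that points to the preferred URL. This helps you avoid duplicate content problems, such as search engines splitting ranking signals across the copies or showing the wrong version in results.

A canonical tag is a <link> element placed in the <head> section, and it looks like this:

<link rel="canonical" href="http://example.com/" />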
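Search engines read the canonical URL from the page’s HTML and, if it is relative, resolve it against the page’s own address. The Python sketch below, built on the standard library’s html.parser and urllib.parse, approximates that for a single page. The function and class names are hypothetical, and returning None when a page declares several canonical URLs reflects the fact that conflicting canonical tags may be ignored.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        if "canonical" in rels and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def canonical_url(html: str, page_url: str):
    """Return the absolute canonical URL, or None if missing or ambiguous."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if len(finder.hrefs) != 1:
        return None  # no canonical tag, or conflicting ones
    return urljoin(page_url, finder.hrefs[0])


html = '<head><link rel="canonical" href="/shoes/" /></head>'
print(canonical_url(html, "https://example.com/shoes/?color=red"))
# https://example.com/shoes/
```

Using absolute URLs in your canonical tags avoids relying on this resolution step at all.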

8. Meta alt text

Alt text, also called an alt attribute, is an HTML attribute added to image tags to provide a text alternative for search engines and for people who can’t see the image, such as screen reader users. Image optimization has become very important for modern SEO strategy: your images should be understandable to both search engines and users.

Alt text does both of these things. It offers a text alternative that is displayed if the image doesn’t load, and it tells search engines like Google what the image is meant to represent. Google places high value on alt text when it comes to understanding your visual content.

Good alt text also helps your images appear in image search results, which link back to your page and give your site yet another source of organic traffic.

Alt text is written as an attribute of the <img> tag:

<img src="http://example.com/xyz.jpg" alt="XYZ">

Here are a few best practices for using alt text on your web pages:

- Use informative file names;

- Keep it short, clear, and to the point;

- Use the right type of image;

- Keep it under 50-55 characters;

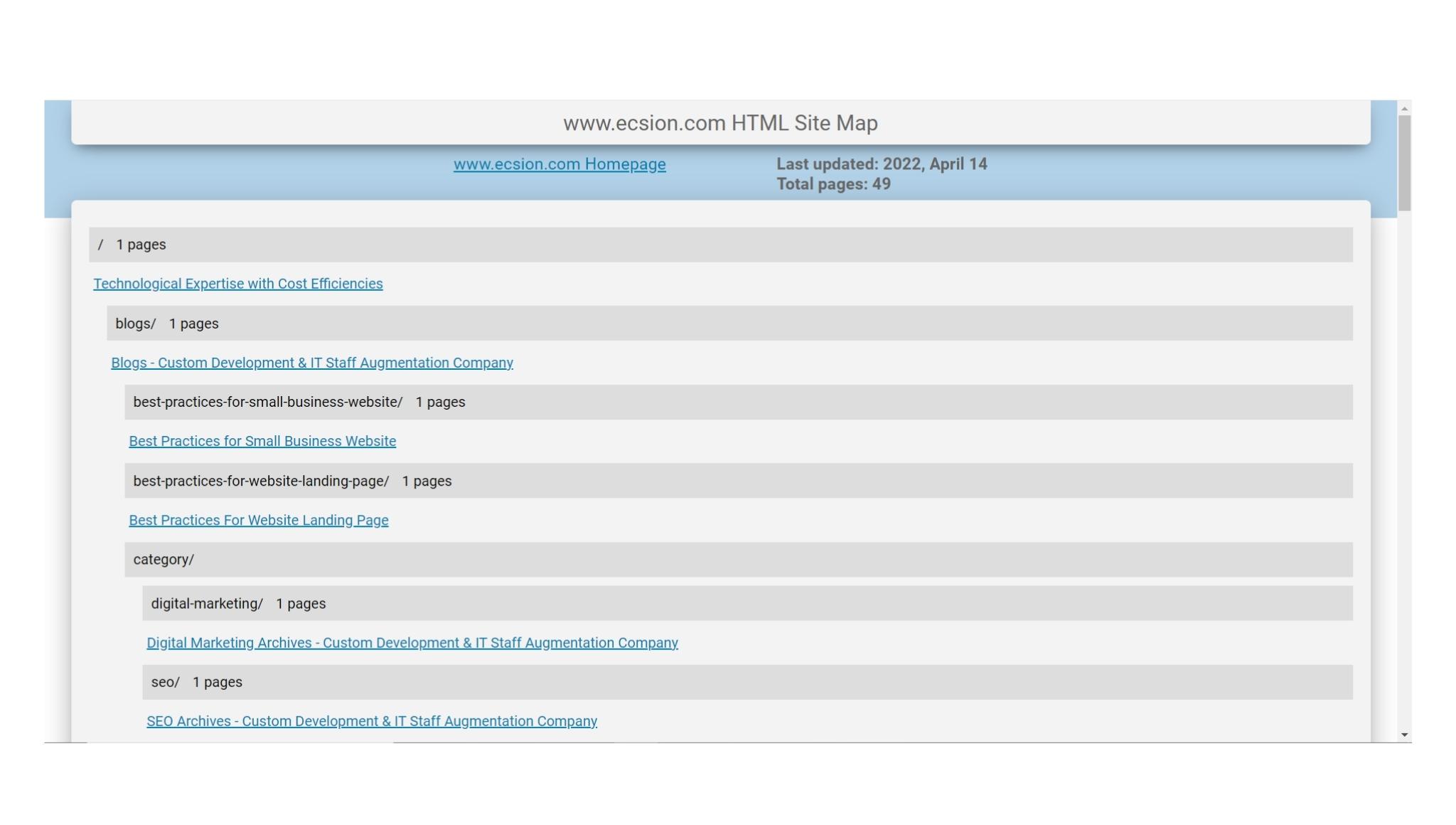

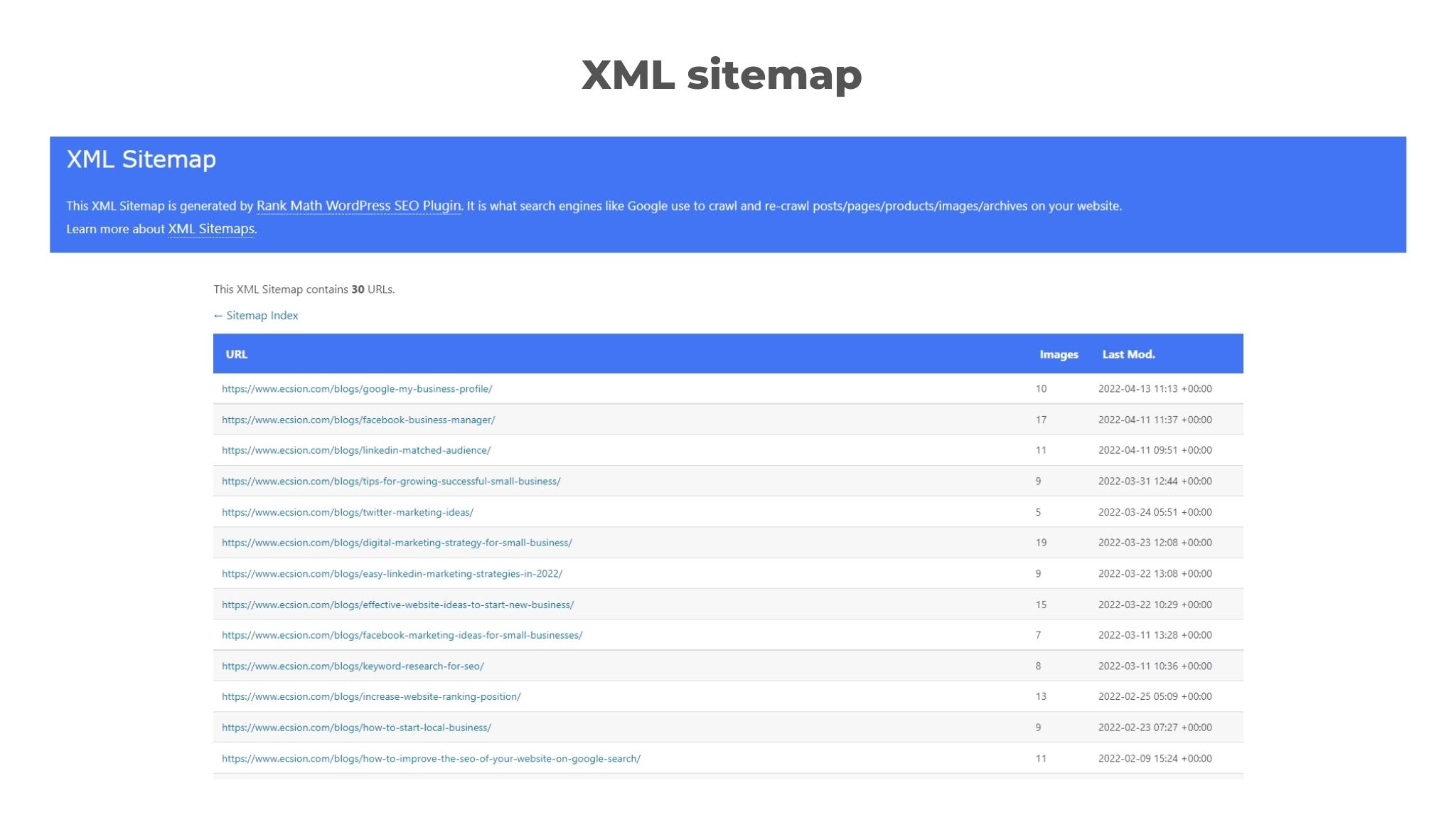

- Create an image sitemap;

- Compress each image to an optimal file size without degrading its quality.
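To find images that still need alt text, you could scan your HTML with a short script. This Python sketch uses the standard library’s html.parser; the class name `AltTextChecker` is hypothetical, and the 55-character limit is taken from the list above. Keep in mind that an empty alt="" is the correct choice for purely decorative images, so treat "empty alt text" findings as items to review rather than errors.

```python
from html.parser import HTMLParser

MAX_ALT_LENGTH = 55  # upper bound from the best-practice list


class AltTextChecker(HTMLParser):
    """Record <img> elements whose alt attribute is missing, empty, or long."""

    def __init__(self):
        super().__init__()
        self.issues = []  # (src, problem) pairs in document order

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "?"
        if "alt" not in attributes:
            self.issues.append((src, "missing alt attribute"))
            return
        alt = attributes["alt"] or ""  # a bare `alt` attribute has no value
        if not alt.strip():
            self.issues.append((src, "empty alt text"))
        elif len(alt) > MAX_ALT_LENGTH:
            self.issues.append((src, f"alt text longer than {MAX_ALT_LENGTH} characters"))


checker = AltTextChecker()
checker.feed('<img src="a.jpg"><img src="b.jpg" alt=""><img src="c.jpg" alt="Red running shoes">')
checker.close()
print(checker.issues)
# [('a.jpg', 'missing alt attribute'), ('b.jpg', 'empty alt text')]
```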

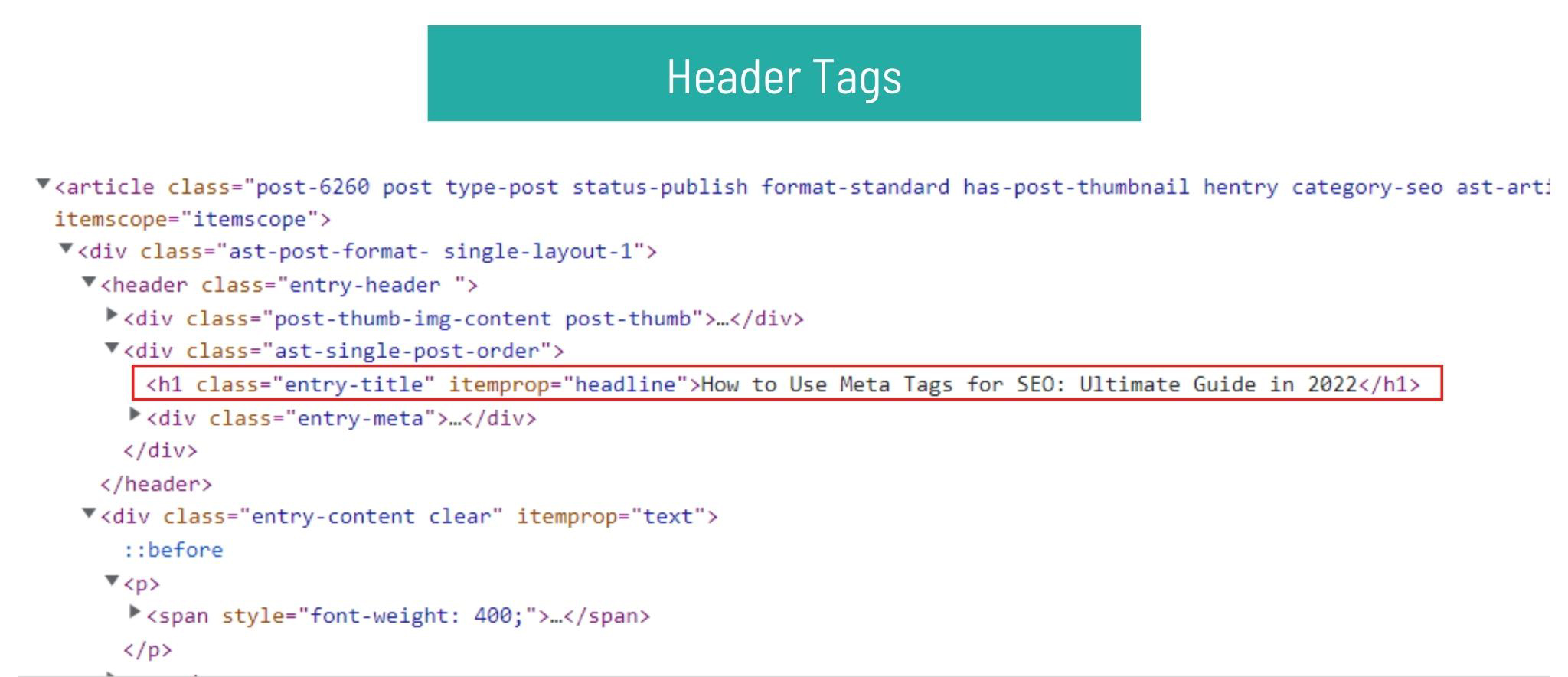

9. Header tags

Header tags are the headings that structure your page. They improve the user experience and make your content easier to read. Their levels, from h1 down to h6, indicate the relative importance of each section.

The h1 tag marks the title of the page, and h2 tags mark the subheadings that break up your content.

It is usually recommended to use only one h1 per page, while you can use as many h2 and h3 tags as you need.

Here’s an example of header tags:

<h1>a quick guide to meta tags in SEO</h1>
<p>paragraph</p>
<p>another paragraph</p>
...
<h3>1.title tag</h3>
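To review the heading structure of a page, you can list its headings in order and count the h1 tags. Here is a minimal Python sketch using the standard library’s html.parser; the names `HeadingOutline` and `check_headings` are hypothetical, and the one-h1 check follows the recommendation above.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for every heading, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None


def check_headings(html: str):
    """Return (has_exactly_one_h1, headings) for an HTML page."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    h1_count = sum(1 for level, _ in parser.headings if level == 1)
    return h1_count == 1, parser.headings


ok, headings = check_headings(
    "<h1>a quick guide to meta tags in SEO</h1><p>paragraph</p><h3>1.title tag</h3>"
)
print(ok)  # True
for level, text in headings:
    print("  " * (level - 1) + text)  # indent each heading by its level
```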

How Does Google Understand Meta Tags?

Google supports two kinds of markup for controlling how your site appears in Google Search:

1. Page-level meta tags

Page-level meta tags are a great way for website owners to give Google information about their pages. They go in the <head> section of the HTML page, like this:

<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<meta name="Description" CONTENT="Author: A.N. Author, Illustrator: P. Picture, Category: Books, Price: £9.24, Length: 784 pages">
<meta name="google-site-verification" content="+nxGUDJ4QpAZ5l9Bsjdi102tLVC21AIh5d1Nl23908vVuFHs34="/>
<title>Example Books – high-quality used books for children</title>
<meta name="robots" content="noindex,nofollow">
</head>
</html>

2. Inline directives

Independently of page-level meta tags, you can exclude parts of an HTML page from the snippets shown in search results. To do this, add the "data-nosnippet" attribute to one of the supported HTML elements:

- span

- div

- section

For example:

<p>
This text can be included in a snippet
<span data-nosnippet>and this part would not be shown</span>.
</p>
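To get a feel for what data-nosnippet does, the Python sketch below extracts a page’s text while skipping anything inside a span, div, or section marked with data-nosnippet. It is only a rough approximation for illustration (Google’s own snippet generation is far more involved and not public), and the names `SnippetText` and `snippet_eligible_text` are hypothetical.

```python
from html.parser import HTMLParser

SUPPORTED_TAGS = {"span", "div", "section"}  # elements that honor data-nosnippet
# Void elements never get an end tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class SnippetText(HTMLParser):
    """Collect text, skipping everything inside data-nosnippet elements."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0  # > 0 while inside an excluded element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._skip_depth:
            self._skip_depth += 1  # element nested inside the excluded region
        elif tag in SUPPORTED_TAGS and any(name == "data-nosnippet" for name, _ in attrs):
            self._skip_depth = 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def snippet_eligible_text(html: str) -> str:
    parser = SnippetText()
    parser.feed(html)
    parser.close()
    return " ".join("".join(parser.parts).split())  # normalize whitespace
```

Running it on the example above keeps "This text can be included in a snippet" and drops the text inside the span.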

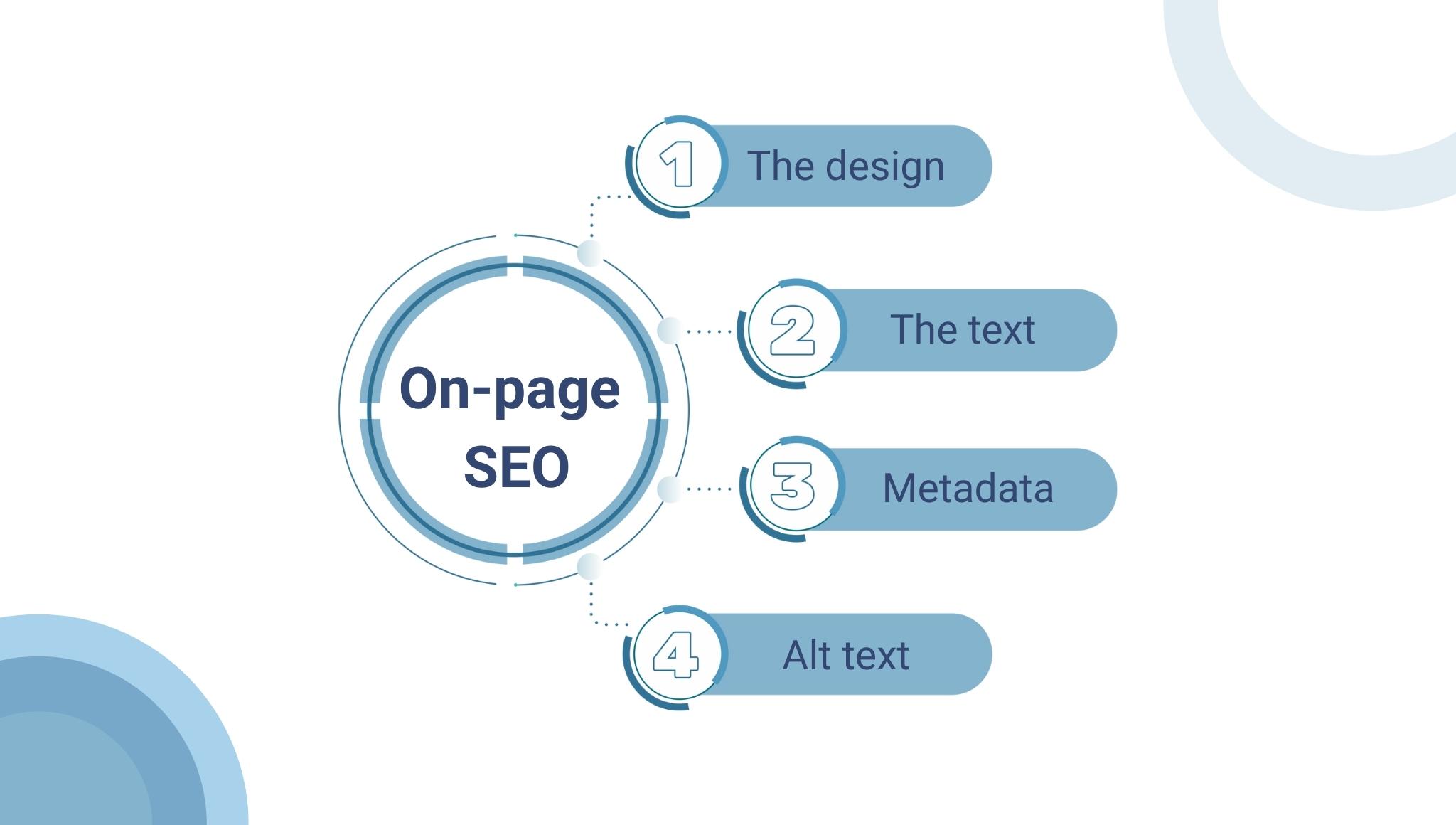

How to Optimize Meta Tags for SEO?

Well-optimized meta tags help both search engines and users: they improve the user experience and present your business information clearly in search results.

Here are a few ways to optimize your meta tags:

- Check whether all your pages have title tags and meta descriptions.

- Give your content clear, well-structured headings.

- Mark up your images with alt text.

- Use robots meta tags to guide how search engines index your content.

- Use canonical tags so that duplicate pages don’t compete with each other in search results.
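You can turn parts of this checklist into a quick automated audit. The Python sketch below parses one page with the standard library’s html.parser and reports a missing title or meta description, the number of h1 headings, images without an alt attribute, and a noindex directive. The names `MetaAudit` and `audit` are hypothetical, and a real audit would also need to fetch pages and review canonical tags across your whole site.

```python
from html.parser import HTMLParser


class MetaAudit(HTMLParser):
    """Gather the SEO-relevant tags discussed in this guide from one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.robots = set()
        self.h1_count = 0
        self.images_without_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (attributes.get("name") or "").lower()
            content = attributes.get("content") or ""
            if name == "description":
                self.description = content
            elif name == "robots":
                self.robots = {d.strip().lower() for d in content.split(",")}
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and "alt" not in attributes:
            self.images_without_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html: str) -> list[str]:
    """Return findings for one page (an empty list if all checks pass)."""
    page = MetaAudit()
    page.feed(html)
    page.close()
    findings = []
    if not page.title.strip():
        findings.append("missing <title>")
    if not page.description.strip():
        findings.append("missing meta description")
    if page.h1_count != 1:
        findings.append(f"{page.h1_count} h1 headings (expected 1)")
    if page.images_without_alt:
        findings.append(f"{page.images_without_alt} image(s) without alt text")
    if page.robots & {"noindex", "none"}:
        findings.append("page is set to noindex")
    return findings
```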

Final thoughts:

Meta tags are not complicated. Understanding the meta tags above should be enough to prevent any significant SEO faux pas.

Want to learn more about meta tags?

Leave us a message in the comment box.